Overview

The creation of a high-performance computing facility is essential to the success of the project. Research in Crete is currently held back by the inability to run computations on problems that demand serious computing power, such as calculations with bases containing a large number of states or large-scale electromagnetic simulations. The proposed facility will enable researchers working in the Center, as well as their collaborators and visitors, to advance their research.

The approach is inspired by recent and current focus areas of academic HPC facilities, including:

• HPC operations excellence and best practice

• Application performance and benchmarking

• HPC storage expertise

• Graphics processing unit (GPU) computing

• HPC visualization

The core design is based on implementations at major academic HPC facilities around the world, and the software infrastructure will be based entirely on open-source code. Compute nodes will be deployed either as CPU nodes or as GPU nodes, providing both a conventional computing environment and a GPU-accelerated infrastructure for highly demanding parallel applications. All compute nodes will be set up as data-less, with only a small local disk for temporary data; this reduces the overall cost and increases flexibility at the same time.

The Metropolis Cluster uses the Simple Linux Utility for Resource Management (SLURM), an open-source workload manager. Before running any job, please consult the following sections of this guide:

- Software

- Storage

- Frequently Asked Questions

- Environment modules

- The Batch Job Environment

- SLURM Quick Start User Guide
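SLURM reads `#SBATCH` lines that appear before the first executable statement of any script that starts with a `#!` interpreter line, so a batch job can be written directly in Python. The sketch below is illustrative, not a site template: the job name, the one-hour time limit and `--cpus-per-task=20` (one full compute node, assuming SLURM on this cluster counts physical cores rather than hardware threads) are assumptions, and no partition is named because partition names are site-specific. The sections listed above remain the authority on the actual settings.

```python
#!/usr/bin/env python3
#SBATCH --job-name=example
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=20
#SBATCH --time=01:00:00
"""Minimal SLURM batch job written in Python (a sketch, not a site template).

sbatch parses the #SBATCH lines above because they precede the first
executable statement; Python itself treats them as ordinary comments.
"""
import os
from multiprocessing import Pool


def allocated_cpus(env=None):
    """Return the CPU count SLURM granted to this task.

    SLURM sets SLURM_CPUS_PER_TASK when --cpus-per-task is requested.
    Outside SLURM (e.g. a local test run) fall back to os.cpu_count().
    """
    env = os.environ if env is None else env
    value = env.get("SLURM_CPUS_PER_TASK")
    if value is not None:
        return int(value)
    return os.cpu_count() or 1


def work(n):
    """Placeholder CPU-bound kernel: sum of squares of 0..n-1."""
    return sum(i * i for i in range(n))


def main():
    cpus = allocated_cpus()
    job_id = os.environ.get("SLURM_JOB_ID", "not-in-slurm")
    # One worker process per allocated CPU keeps the job inside its allocation.
    with Pool(processes=cpus) as pool:
        results = pool.map(work, [200_000] * cpus)
    print(f"job {job_id}: {cpus} workers, checksum {sum(results)}")


if __name__ == "__main__":
    main()
```

Such a script would be submitted with `sbatch example_job.py` (the file name is hypothetical). Reading the CPU count from the environment rather than hard-coding it keeps the number of worker processes in step with whatever the `#SBATCH` lines request.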

Technical data

- Hardware:

- Calculated performance: ~ 25Tflops

- Compute Nodes: 50 IBM NextScale nx360m4 nodes with dual CPU E2670 v2, 10-core / CPU, 96GB RAM / node

- GP-GPU nodes: IBM NextScale 3650 m4 nodes equipped with NVIDIA Grid K2 and Intel Phi 3120P GP-GPU cards

- Available CPU Processing Cores/Threads: 1000/2000

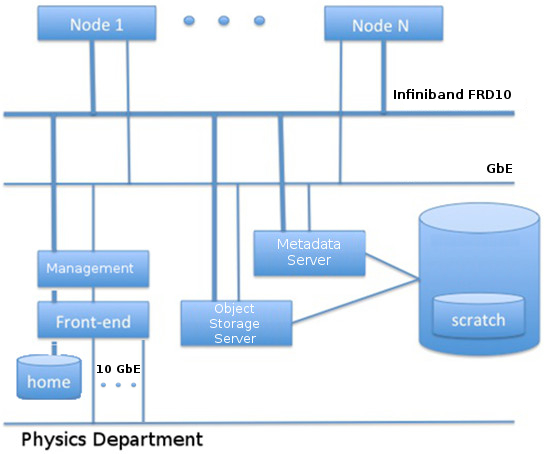

- Storage Capacity: Several IBM Storwize® V5000 enclosures providing 10TB of redundant storage plus 22TB of scratch space on BeeGFS

- Network: Mellanox Infiniband 4xFDR10, with non-blocking full-fat-tree configuration, GbE management network and 10GbE uplinks to Physics Department's network infrastructure

- Software:

  - Operating system: Rocky Linux 8 (64-bit)

  - Queuing system: SLURM

  - Compilers:

    - GNU C, C++, Fortran 90 & 77 (GCC 8.5.0 20210514, Red Hat 8.5.0-10.1)

    - Intel® oneAPI 2022.2

  - Programming libraries: LAPACK, BLAS, ATLAS, MKL, InfiniBand-optimized libraries, etc.

  - Parallel execution environment: Open MPI, Intel MPI
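The aggregate figures in the hardware list can be cross-checked from the per-node specification: 50 nodes × 2 CPUs × 10 cores gives 1000 cores, and two hardware threads per core gives the quoted 2000 threads (this assumes Hyper-Threading is enabled, which is consistent with the 1000/2000 figure). The same arithmetic yields a rough memory budget per core, useful when sizing memory requests. A minimal sketch covering only the 50 CPU compute nodes and ignoring memory reserved by the operating system:

```python
"""Back-of-the-envelope capacity figures for the CPU compute nodes.

Inputs come from the Technical data list; threads_per_core=2 assumes
Hyper-Threading is enabled, matching the quoted 1000/2000 cores/threads.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    nodes: int
    sockets_per_node: int
    cores_per_socket: int
    threads_per_core: int
    ram_gb_per_node: int

    @property
    def cores_per_node(self) -> int:
        return self.sockets_per_node * self.cores_per_socket

    @property
    def total_cores(self) -> int:
        return self.nodes * self.cores_per_node

    @property
    def total_threads(self) -> int:
        return self.total_cores * self.threads_per_core

    @property
    def total_ram_gb(self) -> int:
        return self.nodes * self.ram_gb_per_node

    @property
    def ram_gb_per_core(self) -> float:
        # Evenly divides node memory across physical cores (no OS reserve).
        return self.ram_gb_per_node / self.cores_per_node


compute = NodeSpec(nodes=50, sockets_per_node=2, cores_per_socket=10,
                   threads_per_core=2, ram_gb_per_node=96)

print(f"cores/threads: {compute.total_cores}/{compute.total_threads}")  # 1000/2000
print(f"total RAM: {compute.total_ram_gb} GB")                          # 4800 GB
print(f"RAM per core: {compute.ram_gb_per_core:.1f} GB")                # 4.8 GB
```

A job that needs noticeably more than about 4.8 GB per core will leave some cores on each node idle, so in that case it is usually worth requesting fewer tasks per node.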

Contact

- Technical support: Kapetanakis Giannis [hpc AT physics.uoc.gr]